ASCII To Binary

The ASCII to Binary Converter tool allows you to convert ASCII text into binary code, providing an accurate and instant translation of your input. This tool is perfect for developers, students, and anyone working with digital data who needs to encode ASCII text into binary format. The process is straightforward and efficient, ensuring precise conversions every time.

Best ASCII to Binary Converter Guide: Simple & Effective Solutions

ASCII to binary conversion translates text characters into a form computers understand. This guide explains the process, offers manual conversion methods, and reviews tools to make it easier.

Key Takeaways

ASCII is a standardized method for encoding text characters as numerical values, ensuring consistency across devices, while binary is the foundational language of computers, representing those codes as a series of 0s and 1s.

Automated ASCII to binary conversion tools, like the converter on PagesTools.com, offer efficient and accurate methods for converting text to binary, saving time and reducing human error.

Understanding manual conversion from ASCII to binary, and vice versa, provides deeper insight into the mechanics of digital encoding, despite the convenience and efficiency of automated tools.

Understanding ASCII and Binary

Computer languages may initially seem like a series of inscrutable lines of code, but fundamentally, they’re all about translation. ASCII, which stands for American Standard Code for Information Interchange, is the Rosetta Stone of the computing world. It’s the standardized method that encodes each text character as a numerical value, ensuring consistency across devices and platforms. So where does binary code fit into this? Binary is the foundational language of computers: every command, every character, and every operation boils down to bits, a series of 0s and 1s usually grouped into 8-bit bytes.

While ASCII takes a wide array of text characters, from the letter ‘A’ to the more obscure punctuation marks, and assigns each a unique ASCII code, binary represents these codes in a form that computers can store and act upon. It’s a partnership of efficiency: ASCII ensures that different systems agree on which number stands for which character, while binary enables the machine to process and store that text as binary data. By converting ASCII to binary, we bridge the human-machine communication gap, allowing for the accurate processing and exchange of digital information.

ASCII To Binary by PagesTools.com

Discovering the right tools can be like finding hidden treasure, and in the realm of digital data, the ASCII to Binary Converter by PagesTools.com is a gem not to be overlooked. This tool stands out as a beacon of efficiency for developers, students, and anyone knee-deep in digital data who needs to encode ASCII text into the binary format swiftly. With PagesTools.com’s converter, the process is as straightforward as it is efficient; no need to pore over tables or perform mental gymnastics to understand your data in binary form.

Before we can fully appreciate the capabilities of this tool, we need to understand the fundamentals of ASCII and binary code. Understanding the core of these two systems is essential for anyone looking to convert between them, whether for academic curiosity or professional necessity. So, fasten your seatbelt as we unpack the intricacies of these digital alphabets and set the stage for seamless conversion.

What is ASCII?

In its simplest form, ASCII serves as a dictionary for computers, translating human-readable characters into machine-understandable numbers. Born out of a need for consistency in the early days of computing, ASCII (American Standard Code for Information Interchange) became the bedrock for text representation across various systems. Every letter you type, every digit, and even control characters such as tab and newline have an ASCII value: a specific number assigned to each one.

These values are not random; they’re carefully mapped out in an ASCII table, creating a structured language that computers can interpret. Standard ASCII defines 128 characters (codes 0 to 127), covering control codes, digits, punctuation, and the English letters, such as lowercase ‘a’ (97) and capital ‘Z’ (90). Each code fits in 7 bits; in practice it is usually stored in an 8-bit byte with the leftmost bit set to 0, while extended 8-bit variants use that extra bit for additional characters. This encoding standard has proven to be a linchpin in digital communication, ensuring that English text is interpreted and displayed consistently, whether on web pages or within text files.

What is Binary Code?

Delving deeper into the digital world reveals binary code, the ones and zeroes that form the heartbeat of all computer systems. It’s a simple yet powerful binary system where each bit—each binary digit—can be a 0 or a 1, representing an off or on state in a circuit. This is the fundamental way computers process all data, from the text on this screen to the vast computations required for complex simulations.

Binary’s simplicity is its strength. With just two digits, binary code can encode an astonishing array of information. Each binary number, each string of bits, can represent a different command or piece of data, a digital DNA that gives life to the virtual world. When you strip away the graphics and the interfaces, what remains is binary—raw, unadulterated data that is the true language of computing. And it’s this language that ASCII must be translated into for computers to perform their magic.

Manual Conversion of ASCII to Binary

Despite the age of automation, understanding the manual conversion of ASCII to binary retains a certain charm and utility. It’s like learning to navigate using the stars in a world accustomed to GPS. Manual conversion requires basic mathematics and a good old ASCII table, but it rewards you with a fundamental grasp of how digital encoding works. The process starts with identifying the ASCII decimal value of a character and then using base-2 conversion to transform it into binary—a journey from a human-readable symbol to a binary byte.

While this manual approach may seem outdated compared to modern tools, it offers invaluable insight into the mechanics of character encoding. It’s like learning to build a clock from scratch; you gain a deeper appreciation for the intricate workings of timekeeping. Similarly, by manually converting ASCII characters to binary, you develop a stronger understanding of the underlying principles that govern digital data.
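To make the procedure concrete, here is a minimal Python sketch (Python is one of the languages this guide mentions later). It assumes `ord()` stands in for looking a character up in an ASCII table, and `decimal_to_binary` is a hypothetical helper name, not part of any particular tool.

```python
def decimal_to_binary(value: int, width: int = 8) -> str:
    """Convert a non-negative integer to binary by repeated division by 2."""
    if value < 0:
        raise ValueError("value must be non-negative")
    remainders = []
    while value > 0:
        remainders.append(str(value % 2))  # record the remainder (0 or 1)
        value //= 2                        # continue with the quotient
    # Remainders come out least-significant bit first, so reverse them.
    bits = "".join(reversed(remainders)) or "0"
    return bits.zfill(width)  # pad with leading zeros to a full byte


# ord() plays the role of the ASCII table look-up: 'A' -> 65.
print(decimal_to_binary(ord("A")))  # 01000001
```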

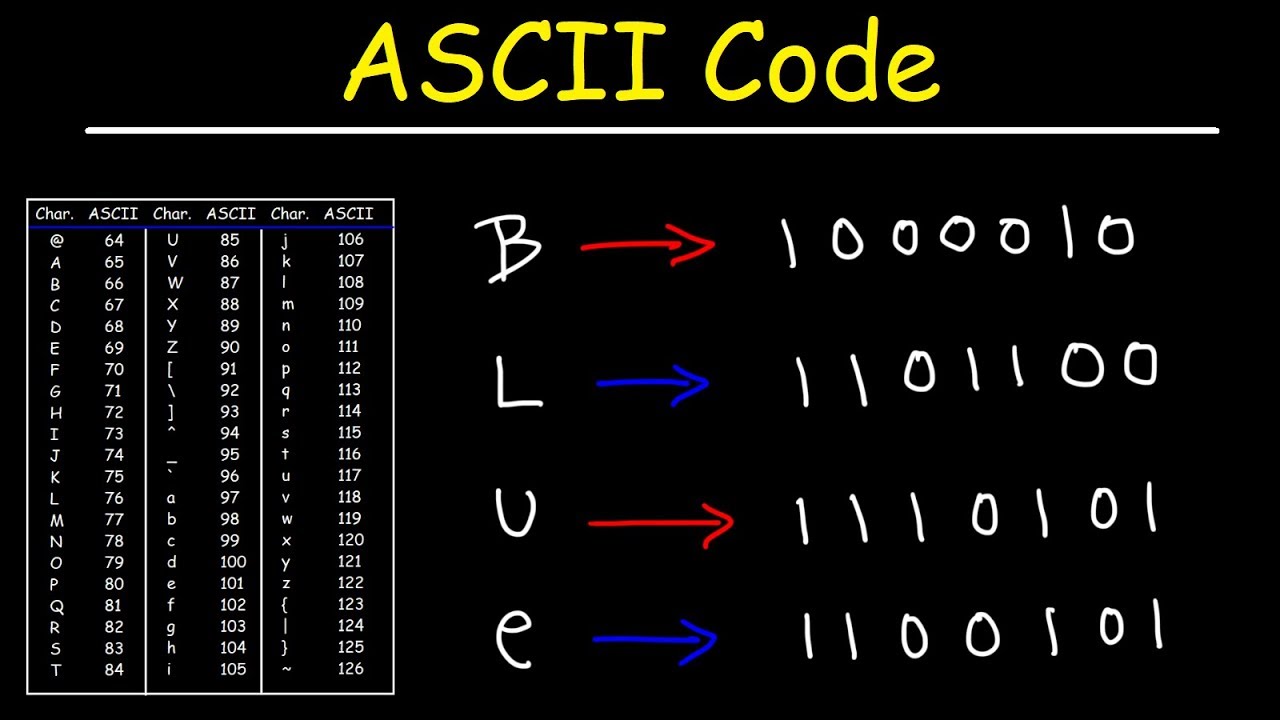

Using ASCII Table for Conversion

An ASCII table functions as a secret decoder ring in the digital age. It’s a chart that maps each character to its corresponding decimal and binary values, serving as a critical reference point for manual conversion. With an ASCII table at your disposal, you can find the binary equivalent of any character by simply locating its decimal value and converting that value to binary. It’s a straightforward process: look up, convert, and you have your binary code.
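The look-up-and-convert routine can be sketched as follows, assuming Python’s built-in `ord()` plays the role of the table look-up and `format(code, "08b")` does the conversion. `ascii_table_rows` is a hypothetical helper that produces a small excerpt of such a table.

```python
def ascii_table_rows(chars: str) -> list[tuple[str, int, str]]:
    """Return (character, decimal code, 8-bit binary) rows for each character."""
    rows = []
    for ch in chars:
        code = ord(ch)                                # "look up" the decimal value
        rows.append((ch, code, format(code, "08b")))  # "convert" it to 8-bit binary
    return rows


# Print a small excerpt of an ASCII table: character, decimal, binary.
for ch, code, bits in ascii_table_rows("ABCabc"):
    print(f"{ch!r:>5} {code:>4} {bits}")
```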

Whether you’re a programmer needing to understand the data you’re working with, or a hobbyist interested in the inner workings of computers, using an ASCII table is a fundamental skill. It’s like knowing the periodic table in chemistry; it provides a solid foundation from which to build more complex knowledge. And just as importantly, it allows you to convert between text and binary manually when no tool is available, or to verify the accuracy of automated tools.

Example: Converting "A" to Binary

Now, let’s apply this theory to a common example: converting the letter “A” to binary. The decimal ASCII code for ‘A’ is 65, a number that may seem arbitrary but is part of the precise structure of the ASCII system. To translate this into the language of computers, we employ base-2 conversion: divide by two repeatedly, record each remainder until the quotient reaches zero, then read the remainders in reverse order. The resultant binary string for ‘A’ is ‘1000001’.

But wait: computers typically work with full 8-bit bytes. So, we add a leading zero to our 7-bit binary string to create an 8-bit binary number: ‘01000001’. This is the binary equivalent of the ASCII code for ‘A’, and it’s how your computer stores this letter in ASCII-encoded text.
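The repeated division for 65, written out step by step: each line divides the previous quotient by 2 and keeps the remainder. Reading the remainders from the bottom line to the top gives 1000001, and the last line checks the result by expanding it in powers of 2.

```latex
\begin{aligned}
65 \div 2 &= 32 \ \text{remainder}\ 1 \\
32 \div 2 &= 16 \ \text{remainder}\ 0 \\
16 \div 2 &= 8 \ \text{remainder}\ 0 \\
8 \div 2 &= 4 \ \text{remainder}\ 0 \\
4 \div 2 &= 2 \ \text{remainder}\ 0 \\
2 \div 2 &= 1 \ \text{remainder}\ 0 \\
1 \div 2 &= 0 \ \text{remainder}\ 1 \\[4pt]
1000001_2 &= 1\cdot 2^6 + 1\cdot 2^0 = 64 + 1 = 65
\end{aligned}
```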

By manually converting ASCII to binary, we can see the transformation of a simple keystroke into the binary data that computers can process and store.

Automated ASCII to Binary Conversion Tools

Although manual conversion provides a solid foundation, modern challenges call for modern solutions: automated ASCII to binary conversion tools. These online gems are the calculators of the digital age, designed to effortlessly convert text to binary at the click of a button. Say goodbye to the painstaking process of flipping through ASCII tables and manually converting each character. These tools do the heavy lifting for you, transforming individual characters into their binary equivalents and compiling them into a binary file or string.

Automated converters come in various flavors, with user-friendly interfaces that cater to both the novice and the tech-savvy. Some even offer batch processing capabilities, allowing for the simultaneous conversion of multiple ASCII characters to binary, which can be a massive time-saver when working with large volumes of data. The advancements in these tools reflect the ongoing evolution of technology, making complex tasks more accessible and less error-prone.

How to Use Online Converters

Using an online ASCII to binary converter is incredibly straightforward. Here’s how to do it:

Enter your ASCII string into the text box provided on the converter’s webpage.

With some tools, you can even specify the character encoding type and choose an output delimiter to format the binary output to your liking.

Once you’re all set, click the convert button to see your text in binary format; many converters also update the output in real time as you type.
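Under the hood, a converter with these options could work roughly like the sketch below. The `sep` and `encoding` parameters of the hypothetical `text_to_binary` function are stand-ins for the output delimiter and encoding settings described above; no particular website is claimed to implement it this way.

```python
def text_to_binary(text: str, sep: str = " ", encoding: str = "ascii") -> str:
    """Encode text with the chosen encoding and show each byte as 8 bits."""
    data = text.encode(encoding)  # raises UnicodeEncodeError for unsupported characters
    return sep.join(format(byte, "08b") for byte in data)


print(text_to_binary("Hi"))           # 01001000 01101001
print(text_to_binary("Hi", sep=","))  # 01001000,01101001
print(text_to_binary("Hi", sep=""))   # 0100100001101001
```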

Convenience is king with these online tools; many offer a one-click option to copy the binary result, making it easy to transfer the data wherever it needs to go. It’s a bit like having a bilingual friend who instantly translates your spoken words into another language. This ease of use not only saves precious time but also ensures that you can focus on more complex tasks at hand, confident that the conversion is being handled accurately and efficiently.

Benefits of Using Automated Tools

The benefits of using automated tools for ASCII to binary conversion are quite evident. They offer a modern approach that significantly reduces the time and effort required for conversion, freeing you up to tackle other aspects of your project. The magic of automation lies in its ability to process multiple characters in a blink, ensuring that even large blocks of text are converted quickly and accurately.

Accuracy is another hallmark of these tools, as they eliminate the human error factor inherent in manual conversion. This is crucial in fields where precision is paramount, such as in programming or data analysis. On top of that, the convenience of these tools cannot be overstated—they allow you to effortlessly convert ASCII text to binary with just a few clicks, making what was once a tedious task nothing more than a simple routine.

ASCII Characters and Binary Numbers System

Exploring the interplay between ASCII characters and the binary number system reveals the simple elegance of digital text representation. Each ASCII character, from the humble space to the complex ‘@’ symbol, is assigned a unique binary code, enabling it to be stored, manipulated, and displayed by our digital devices. It’s a language of efficiency, where every keystroke has a distinct binary equivalent that computers can easily process.

The ASCII standard, with its 7-bit codes, provides 128 combinations, enough to cover the basic English alphabet, digits, punctuation, and various control characters. However, the digital domain is ever-expanding, and with it, so too is the need for a richer set of characters. This is where extended ASCII comes into play: 8-bit code sets that add another 128 characters, for a total of 256 possible symbols.
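The arithmetic behind 128 and 256, and a simple check for whether a piece of text stays within the 7-bit range, can be sketched in Python; `is_standard_ascii` is a hypothetical helper name.

```python
# Number of distinct codes available with 7 and 8 bits.
print(2 ** 7)  # 128: standard ASCII, codes 0-127
print(2 ** 8)  # 256: 8-bit extended ASCII code sets, codes 0-255


def is_standard_ascii(text: str) -> bool:
    """True if every character fits in 7-bit ASCII (codes 0-127)."""
    return all(ord(ch) < 128 for ch in text)


print(is_standard_ascii("Hello @ 42"))  # True
print(is_standard_ascii("café"))        # False: 'é' is outside 0-127
```

Python 3.7 and later also provide the built-in `str.isascii()` method, which performs the same check.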

ASCII to Binary Conversion Table

An ASCII to binary numbers conversion table is an essential tool that simplifies the task of encoding text into binary. It’s a straightforward but powerful reference that allows you to convert multiple ASCII codes to binary at once. This is crucial for data storage, as text files on our computers are stored as binary on the hard drive. With a conversion table, the process of translating ASCII to binary becomes a matter of simple look-up and conversion.
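A conversion table can be built once and then used purely for look-ups. This sketch assumes the table covers only the 95 printable characters (codes 32 to 126), so control characters such as newline are rejected; `ASCII_TO_BINARY` and `convert_with_table` are hypothetical names.

```python
# A precomputed look-up table for the printable ASCII range (codes 32-126).
ASCII_TO_BINARY = {chr(code): format(code, "08b") for code in range(32, 127)}


def convert_with_table(text: str) -> str:
    """Translate text to binary by table look-up, one character at a time."""
    try:
        return " ".join(ASCII_TO_BINARY[ch] for ch in text)
    except KeyError as err:
        raise ValueError(f"{err.args[0]!r} is not a printable ASCII character") from err


print(len(ASCII_TO_BINARY))      # 95
print(convert_with_table("A@"))  # 01000001 01000000
```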

Whether you’re encoding a single character or an entire manuscript, the conversion table is your trusty sidekick. It’s the master key that unlocks the binary form of any text character, ensuring that the data you work with is accurately represented in the digital landscape. It’s not just about conversion; it also gives you a reference for checking that text has been encoded correctly.

Extended ASCII and Special Characters

Moving beyond the standard ASCII set introduces us to the domain of extended ASCII and special characters. Extended ASCII is not a single standard but a family of 8-bit code pages, such as ISO 8859-1 (Latin-1) and Windows-1252, that use codes 128 to 255 for accented letters, currency signs, and, in some variants, graphic characters. These sets matter for text in languages beyond English, although the same code can mean different characters in different code pages, and 256 codes cannot cover every alphabet.

Automated converters shine when dealing with extended ASCII, as they often let you choose the encoding for these additional characters and apply it consistently, ensuring that no supported character is left behind in the conversion process. These tools act as translators between text and binary, converting accented letters and symbols as long as the selected encoding includes them, which makes them valuable in a world where digital communication transcends borders and languages.
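Why the chosen encoding matters can be shown with Python’s built-in codecs. This sketch uses ISO 8859-1 (`latin-1`) and Windows-1252 (`cp1252`) as examples of 8-bit extended sets, with UTF-8 for contrast; `bytes_as_bits` is a hypothetical helper, and which encodings a given online converter offers is an assumption to check per tool.

```python
def bytes_as_bits(text: str, encoding: str) -> str:
    """Show the bytes produced by an encoding as space-separated 8-bit groups."""
    return " ".join(format(b, "08b") for b in text.encode(encoding))


print(bytes_as_bits("é", "latin-1"))  # 11101001 (one byte, code 233)
print(bytes_as_bits("é", "utf-8"))    # 11000011 10101001 (two bytes)
print(bytes_as_bits("€", "cp1252"))   # 10000000 (code 128 in Windows-1252)
# bytes_as_bits("€", "latin-1") raises UnicodeEncodeError: ISO 8859-1 has no euro sign.
```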

Practical Applications of ASCII to Binary Conversion

ASCII to binary conversion has numerous and diverse practical applications, threading through every aspect of our digital lives. It’s the cornerstone of digital representation and storage, ensuring that every piece of text data—from your favorite ebook to a simple email—is stored in a format that computing systems can handle efficiently. This conversion is not just about turning text into a string of 0s and 1s; it’s about enabling the seamless flow of information between systems, whether it’s across the room or across the globe.

In the world of embedded systems, where resources are at a premium, ASCII to binary conversion ensures that characters are displayed correctly on everything from your microwave’s LCD screen to the dashboard of your car. It’s a process that underpins the functionality of countless devices, ensuring that the interface you interact with is responsive and accurate. From the perspective of an engineer or developer, mastering this conversion process is akin to holding the key to digital clarity.

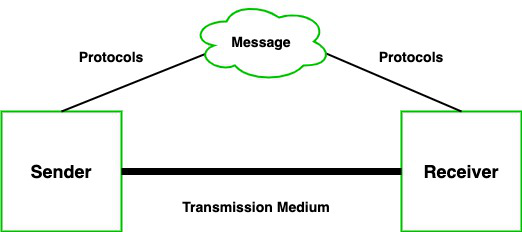

Data Transmission

The importance of ASCII to binary conversion in data transmission is immense. When sending text data over networks, the information must arrive in a form the receiving system can interpret, and ASCII provides a compact, agreed-upon encoding of one byte per character. Encoding text as ASCII bytes produces the binary file or stream that is actually transmitted, and because both sides use the same table, the receiver can decode it accurately.

Communication protocols, the rules that dictate how data is transmitted, operate on binary data. By converting text to binary, we ensure that information can be interpreted and analyzed correctly at the destination. It’s like sending a message in a shared code: the recipient, in this case the computer or device that receives the binary data, uses the same ASCII table to translate it back into human-readable text. This is encoding, not encryption; anyone who knows ASCII can read the message.
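A minimal sketch of text crossing a connection as bytes, assuming a local `socket.socketpair()` stands in for a real network link. `send_text` is a hypothetical name, and letting the receiver know the message length in advance is a simplification; real protocols need some framing, such as a length prefix or a delimiter.

```python
import socket


def send_text(message: str) -> str:
    """Send ASCII text through a local socket pair and decode what arrives."""
    payload = message.encode("ascii")  # text -> bytes (the binary actually sent)
    sender, receiver = socket.socketpair()
    with sender, receiver:
        sender.sendall(payload)
        received = b""
        # recv() may return fewer bytes than requested, so keep reading.
        while len(received) < len(payload):
            chunk = receiver.recv(len(payload) - len(received))
            if not chunk:  # connection closed early
                break
            received += chunk
    print(" ".join(format(b, "08b") for b in received))  # received bytes shown as bits
    return received.decode("ascii")  # bytes -> text on the receiving side


print(send_text("Hi"))
```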

Programming and Software Development

In programming and software development, converting between ASCII and binary is a routine task. Programmers often need to inspect binary data as ASCII text, or the reverse, for debugging or processing purposes. It’s a vital step in the development process, helping to ensure that programs run smoothly and that data is presented in a way that’s meaningful to the user. Whether it’s sorting text, searching for patterns, or preparing data for encryption, understanding how characters map to binary is at the heart of efficient text data handling.

For example, in Python, the int function called with a base of 2, as in int('01000001', 2), transforms a binary string into an integer, which can then be converted to an ASCII character using the chr function. This interplay between binary and text is a common occurrence in the world of coding, where understanding and manipulating data at the lowest level can be crucial to the success of a project.
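The two built-ins fit together like this, with `ord()` and `format()` covering the opposite direction; the variable names are illustrative.

```python
bits = "01000001"
code = int(bits, 2)  # parse the string as a base-2 number -> 65
char = chr(code)     # map the code to its character -> 'A'
print(code, char)    # 65 A

# The reverse direction: character -> code -> 8-bit binary string.
print(format(ord("A"), "08b"))  # 01000001
```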

In C, a common approach loops over each ASCII character and uses bitwise operations, shifting and masking, to extract its bits one at a time, converting the character to its binary form. This demonstrates the importance of conversion in the foundational aspects of computing and the development of software that we rely on every day.
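The shift-and-mask idea behind such C loops can be shown in Python, used here to keep all examples in one language. In C the same test is typically written `(c >> i) & 1`; `char_to_bits` is a hypothetical helper, and this sketch only handles characters whose code fits in one byte.

```python
def char_to_bits(ch: str) -> str:
    """Extract the 8 bits of a single-byte character, most significant bit first."""
    code = ord(ch)
    if code > 0xFF:
        raise ValueError("character does not fit in one byte")
    bits = []
    for position in range(7, -1, -1):
        # Shift the wanted bit down to position 0, then mask off everything else.
        bits.append("1" if (code >> position) & 1 else "0")
    return "".join(bits)


print(" ".join(char_to_bits(ch) for ch in "Hi"))  # 01001000 01101001
```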

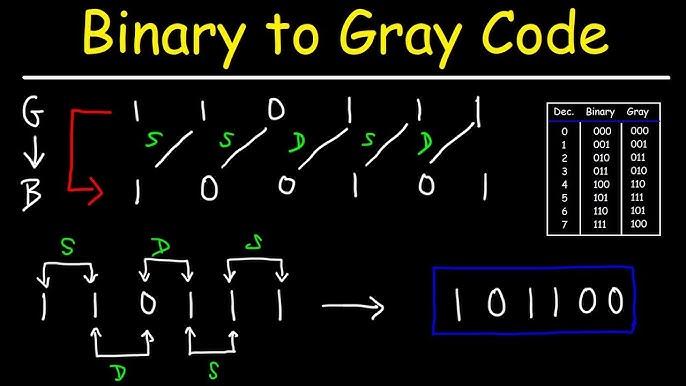

Binary to ASCII Conversion

Just as ASCII can be converted to binary, the reverse, binary to ASCII conversion, is equally critical. This is the magic that brings data back into a form we can understand, turning the binary bytes that computers love back into the characters and words that humans use. This process is essential for displaying binary data as text, whether it’s the contents of a file, a message received over a network, or debugging output from a program.

The conversion from binary to ASCII is like reverse engineering a puzzle; it involves interpreting a string of binary digits as a sequence of bits, then mapping each 8-bit segment back to the corresponding ASCII character. By doing this, we can reconstruct the original text from its binary representation, ensuring that the information isn’t lost in translation between the digital and human worlds.

Steps to Convert Binary to ASCII

The process of converting binary to ASCII follows a logical and methodical sequence. Here are the steps to convert binary to ASCII:

Divide the binary string into 8-bit chunks, as each chunk represents one ASCII character.

Convert each 8-bit binary chunk into its decimal equivalent using base-2 conversion.

Refer to an ASCII table to find the corresponding ASCII character for each decimal value.
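The three steps map directly onto a short Python function. This is a sketch, assuming the input consists of 8-bit groups with optional whitespace between them and that strict 7-bit ASCII is wanted; `binary_to_text` is a hypothetical name, and Python’s ASCII codec performs the table look-up of the last step, rejecting any code above 127.

```python
def binary_to_text(binary: str) -> str:
    """Decode 8-bit groups of 0s and 1s (whitespace optional) into ASCII text."""
    bits = "".join(binary.split())  # drop spaces, tabs and newlines between groups
    if len(bits) % 8 != 0 or set(bits) - {"0", "1"}:
        raise ValueError("input must be 0s and 1s in complete 8-bit groups")
    # Step 1: divide the string into 8-bit chunks.
    chunks = [bits[i:i + 8] for i in range(0, len(bits), 8)]
    # Step 2: convert each chunk to its decimal value with base-2 conversion.
    codes = [int(chunk, 2) for chunk in chunks]
    # Step 3: map each value to its ASCII character (raises an error above 127).
    return bytes(codes).decode("ascii")


print(binary_to_text("01001000 01101001"))  # Hi
print(binary_to_text("0100100001101001"))   # Hi
```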

This base-2 conversion, multiplying each bit by its power of 2 (128, 64, 32, 16, 8, 4, 2, 1 from left to right) and adding the results, is the heart of binary to ASCII translation, as it takes us from a binary byte back to a decimal value that can be looked up in an ASCII table.

Finding the character for that decimal value is a simple but crucial step that brings the binary data back into the realm of human-readable text. Whether done manually or with the help of automated tools, these steps reconstruct the original text exactly, provided the binary was produced with the same encoding, maintaining the integrity of the original text data.

Example: Converting Binary to "Hello"

Let’s illustrate this with a practical example by converting the binary string 01001000 01100101 01101100 01101100 01101111 back to the word “Hello”. Each 8-bit segment here represents a different character in the word, and it’s our task to decode them. The first segment ‘01001000’ converts to the decimal value of 72, which corresponds to the letter ‘H’ in the ASCII table. By repeating this process for each segment, we can gradually reconstruct the entire word.
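Expanding each 8-bit segment of the example in powers of 2 confirms the decimal values and characters; only four distinct segments appear, because the third and fourth are identical (‘Hello’ contains two ‘l’ characters).

```latex
\begin{aligned}
01001000_2 &= 2^6 + 2^3 = 64 + 8 = 72 \;\rightarrow\; \text{H} \\
01100101_2 &= 2^6 + 2^5 + 2^2 + 2^0 = 64 + 32 + 4 + 1 = 101 \;\rightarrow\; \text{e} \\
01101100_2 &= 2^6 + 2^5 + 2^3 + 2^2 = 64 + 32 + 8 + 4 = 108 \;\rightarrow\; \text{l} \\
01101111_2 &= 2^6 + 2^5 + 2^3 + 2^2 + 2^1 + 2^0 = 64 + 32 + 8 + 4 + 2 + 1 = 111 \;\rightarrow\; \text{o}
\end{aligned}
```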

As we convert each binary segment to ASCII and concatenate the characters, the familiar greeting emerges from the string of binary digits. It’s a perfect illustration of how binary data can hold the essence of human communication, and with the right knowledge, we can unlock its meaning. This example encapsulates the entire process of binary to ASCII conversion, demonstrating how we can retrieve human-readable text from its binary form.

Summary

As we wrap up our exploration of ASCII to binary conversion and vice versa, it’s clear that this process is more than a mere technical exercise. It’s a fundamental aspect of digital communication, a bridge between the world of human language and the binary realm of computing. Through this guide, we’ve uncovered the simplicity behind the complexity, demystifying the conversion process and revealing the practical applications that impact our everyday digital interactions.

Feel empowered by the knowledge that whether you’re manually converting ASCII characters using a conversion table or leveraging the efficiency of automated tools, you’re engaging with the very language of computers. Cherish the clarity that comes with understanding how the keystrokes you take for granted are transformed into the binary code that powers our digital world. And remember, with the right tools and knowledge, you can effortlessly navigate the vast landscape of digital data, confident in your ability to convert and understand it in all its forms.

Frequently Asked Questions

What is the difference between ASCII and binary code?

The difference between ASCII and binary code is that ASCII is a character encoding standard that assigns a numeric value to each text character, while binary code is the language of computers that uses 0s and 1s to represent data or instructions. In computers, ASCII characters are represented using binary codes.

Why do we need to convert ASCII to binary?

Computers store and process all data in binary, so text must be encoded as binary before a computer can work with it; ASCII defines which binary code represents each character. Converting ASCII to binary, by hand or with a tool, shows you exactly how that text is represented.

Can I convert special characters and symbols using ASCII to binary converters?

Yes, as long as the characters belong to the encoding the converter uses. Standard ASCII already covers common symbols such as @, # and &; accented letters and other special symbols require extended ASCII or a Unicode encoding such as UTF-8, which many converters support.

How do automated ASCII to binary conversion tools work?

Automated ASCII to binary conversion tools work by converting each character into its ASCII decimal value and then translating these values into binary. They offer a user-friendly interface with options for batch processing and formatting.

Is it necessary to understand manual conversion if there are automated tools available?

Yes, it is necessary to understand manual conversion because it provides a deeper comprehension of character encoding and can be useful in situations where automated tools are not available or when verifying the accuracy of conversions.